    static void wblTableName(Args _args)
    {
        tableId tableId = 175; //1962; //2954; //101377;

        info(strFmt("%1 : %2", tableId2name(tableId), tableId2pname(tableId)));
    }

Friday, October 19, 2018

Get table name from its id

Wednesday, October 17, 2018

How to get all related table ids from code

We can loop over all relations on a table in code.

It can be useful when we need, say, to open a form with a related record.

Here you can find a more elaborate example.

    static void myGetRelatedTableNames(Args _args)
    {
        myInExtCodeValueTable   myInExtCodeValueTable;
        int                     mapId;
        TableName               relatedTableName;
        TableId                 relatedTableId;
        Set                     tablesIdsSet    = new Set(Types::Integer);
        Set                     tablesNamesSet  = new Set(Types::String);
        TableId                 tableId         = tableName2id(tableStr(myInExtCodeValueTable));
        Dictionary              dictionary      = new Dictionary();
        SysDictTable            dictTable       = dictionary.tableObject(tableId);
        DictRelation            dictRelation    = new DictRelation(myInExtCodeValueTable.TableId);
        int                     mapCnt          = dictTable.relationCnt();
        container               ret;
        str                     relationName;

        // create a maps of literals for all tables from the table relations
        // so that we could get tables names based on their ids
        // and if any new relation will be added to multiple external codes table
        // it is present automatically in this view
        for (mapId = 1; mapId <= mapCnt; mapId++)
        {
            // elaborate if any table present many times
            relationName = dictTable.relation(mapId);
            dictRelation.loadNameRelation(relationName);

            if (dictRelation)
            {
                relatedTableId   = dictRelation.externTable();
                relatedTableName = tableId2pname(relatedTableId);
                tablesIdsSet.add(relatedTableId);
                tablesNamesSet.add(relatedTableName);
                info(strFmt("Table %1 - %2", relatedTableId, relatedTableName));
            }
        }

        ret = [tablesIdsSet.pack(), tablesNamesSet.pack()];
    }
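The job above ends by packing both sets into a container. As a minimal sketch of how such a container could be read back, assuming a hypothetical job name `myShowRelatedTableNames` and two standard tables used only as sample data, a packed set can be restored with `Set::create` and walked with an enumerator:

```xpp
static void myShowRelatedTableNames(Args _args)
{
    // Hypothetical stand-in for the container built by the job above
    Set             namesSet = new Set(Types::String);
    container       ret;
    Set             restoredNames;
    SetEnumerator   enumerator;

    namesSet.add(tableId2pname(tableNum(CustTable)));
    namesSet.add(tableId2pname(tableNum(VendTable)));
    ret = [namesSet.pack()];

    // Restore the set from its packed form and list its elements
    restoredNames = Set::create(conPeek(ret, 1));
    enumerator    = restoredNames.getEnumerator();

    while (enumerator.moveNext())
    {
        info(enumerator.current());
    }
}
```

Because a Set keeps unique values only, a table that is referenced by several relations appears once in the output.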

Labels:

AX2012,

DictTable,

foreign key,

form,

jump,

record,

Refelctions,

relation,

table

Wednesday, September 12, 2018

Lookup and Modified methods for FormReferenceGroup fields in D365

Let's say we need to keep a manufacturer code for a particular product on the purchase line, so that each Product/Manufacturer code combination is unique.

Three tables are referenced via RecId fields.

So once Manufacturer code field is placed in the form, we end up with a FormReferenceGroup.

We can easily override its lookup method by subscribing to the relevant event on it.

    [FormControlEventHandler(formControlStr(PurchTable, myEcoResManufacturerProduct_myEcoResManufacturerProductRecId), FormControlEventType::Lookup)]
    public static void myEcoResManufacturerProduct_myEcoResManufacturerProductRecId_OnLookup(FormControl sender, FormControlEventArgs e)
    {
        PurchLine purchLine = sender.formRun().dataSource(formDataSourceStr(PurchTable, PurchLine)).cursor() as PurchLine;
        FormControlCancelableSuperEventArgs cancelableArgs = e as FormControlCancelableSuperEventArgs;

        myEcoResManufacturerProduct::lookupByItem(sender, purchLine.itemId);
        cancelableArgs.CancelSuperCall();
    }

    public client static Common lookupByItem(FormReferenceControl _formReferenceControl, ItemId _itemId)
    {
        SysReferenceTableLookup sysReferenceTableLookup;
        Query                   query;
        QueryBuildDataSource    myEcoResMan;

        sysReferenceTableLookup = SysReferenceTableLookup::newParameters(tableNum(myEcoResManufacturerProduct), _formReferenceControl);
        sysReferenceTableLookup.addLookupfield(fieldNum(myEcoResManufacturerProduct, EcoResManufacturerRecId));
        sysReferenceTableLookup.addLookupfield(fieldNum(myEcoResManufacturerProduct, EcoResManufacturerPartNbr));

        query = new Query();
        myEcoResMan = query.addDataSource(tableNum(myEcoResManufacturerProduct));
        myEcoResMan.addRange(fieldNum(myEcoResManufacturerProduct, EcoResProductRecId)).value(SysQuery::value(InventTable::find(_itemId).Product));
        sysReferenceTableLookup.parmQuery(query);

        return sysReferenceTableLookup.performFormLookup() as myEcoResManufacturerProduct;
    }

But what if the user wants to create new values in the appropriate tables when they do not exist yet?

We can catch the modified event in order to create new values before failing the validation.

However, given that its content may be changed, it is impossible to get access to its fields at design time.

We can do it at run time by getting the sought field controls by their names and then overloading their Modified() methods. (See the similar trick for AX 2012: https://alexvoy.blogspot.com/2014/01/how-to-set-properties-for-reference.html)

The code you need to add.

    [ExtensionOf(formStr(PurchTable))]
    final class myPurchTableForm_PurchTableManuf_Extension
    {
        private const str myFieldNameDisplayProductNumber   = 'EcoResManufacturerPartNbr';
        private const str myFieldNameEcoResManufacturerName = 'EcoResManufacturerName';
        private FormStringControl myFSCDisplayProductNumber;
        private FormStringControl myFSCEcoResManufacturerName;

        // the only way to change the standard modified method for a control inside of a dynamically populated reference group
        // is to get it by its name looping all form controls of this group during run-time. then to overload it
        [FormEventHandler(formStr(PurchTable), FormEventType::Initialized)]
        public void PurchTable_OnInitialized(xFormRun sender, FormEventArgs e)
        {
            FormDesign                  formDesign = sender.design();
            FormReferenceGroupControl   formReferenceGroupControl;

            formReferenceGroupControl = formDesign.controlName(formControlStr(PurchTable, myEcoResManufacturerProduct_myEcoResManufacturerProductRecId)) as formReferenceGroupControl;
            this.registerManufacturerNameOverload(formReferenceGroupControl);
        }

        private void registerManufacturerNameOverload(FormReferenceGroupControl _formReferenceGroupControl)
        {
            int                 i;
            Object              childControl;
            FormStringControl   formStringControl;

            for (i = 1; i <= _formReferenceGroupControl.controlCount(); i++) // FilterCategory is of FormReferenceGroupControl type
            {
                childControl      = _formReferenceGroupControl.controlNum(i);
                formStringControl = childControl as formStringControl;

                // skip child controls that are not string controls
                if (!formStringControl)
                {
                    continue;
                }

                if (formStringControl.DataFieldName() == myFieldNameEcoResManufacturerName)
                {
                    myFSCEcoResManufacturerName = formStringControl;
                    formStringControl.registerOverrideMethod(methodStr(formStringControl, modified), formMethodStr(PurchTable, myEcoResManufacturerName_modified_overload), this);
                }

                if (formStringControl.DataFieldName() == myFieldNameDisplayProductNumber)
                {
                    myFSCDisplayProductNumber = formStringControl;
                    formStringControl.registerOverrideMethod(methodStr(formStringControl, modified), formMethodStr(PurchTable, myDisplayProductNumber_modified_overload), this);
                }
            }
        }

        /// <summary>
        /// We have to allow the user to insert any value, even though such a value is not found in the referenced table;
        /// then we will ask whether this new value must be created in the table and set this new value for the current record
        /// </summary>
        /// <param name = "_sender">EcoResManufacturerName</param>
        public void myEcoResManufacturerName_modified_overload(FormStringControl _sender)
        {
            myEcoResManufacturer myEcoResManufacturer = myEcoResManufacturer::findOrCreateByName(_sender.text());

            _sender.modified();
        }

        /// <summary>
        /// We have to allow the user to insert any value, even though such a value is not found in the referenced table;
        /// then we will ask whether this new value must be created in the table and set this new value for the current record
        /// </summary>
        /// <param name = "_sender">EcoResManufacturerPartNbr</param>
        public void myDisplayProductNumber_modified_overload(FormStringControl _sender)
        {
            Common                      comm      = _sender.dataSourceObject().cursor();
            PurchLine                   purchLine = _sender.parentControl().dataSourceObject().cursor() as PurchLine;
            myEcoResManufacturerProduct myEcoResManufacturerProduct = myEcoResManufacturerProduct::findOrCreateEcoResManufacturerProduct(
                purchLine.itemId,
                myFSCEcoResManufacturerName.text(),
                _sender.text());

            _sender.modified();
        }
    }

Table methods

    public static myEcoResManufacturer findOrCreateByName(myEcoResManufacturerName _manufacturerName)
    {
        myEcoResManufacturer myEcoResManufacturer = myEcoResManufacturer::findByName(_manufacturerName);

        // nothing found, so offer to create the manufacturer
        if (!myEcoResManufacturer)
        {
            if (Box::confirm("Do you want to create new manufacturer?", strFmt(myEcoResManufacturer::txtNotExist(), _manufacturerName)))
            {
                try
                {
                    myEcoResManufacturer.EcoResManufacturerName = _manufacturerName;
                    if (myEcoResManufacturer.validateWrite())
                    {
                        myEcoResManufacturer.insert();
                    }
                }
                catch
                {
                    error("Failed to create new manufacturer");
                }
            }
        }

        return myEcoResManufacturer;
    }

    public static myEcoResManufacturerProduct findOrCreateEcoResManufacturerProduct(ItemId _itemId, myEcoResManufacturerName _manufacturerName, myEcoResManufacturerPartNbr _manufacturerPartNbr)
    {
        myEcoResManufacturerProduct myEcoResManufacturerProduct;
        myEcoResManufacturer        myEcoResManufacturer;
        InventTable                 inventTable = InventTable::find(_itemId);

        // item and part number are given and exist
        if (inventTable.Product && _manufacturerPartNbr)
        {
            // such a manufacturer exists, so just try to find it for given combination
            myEcoResManufacturer        = myEcoResManufacturer::findOrCreateByName(_manufacturerName);
            myEcoResManufacturerProduct = myEcoResManufacturerProduct::find(inventTable.Product, myEcoResManufacturer.RecId);

            if (!myEcoResManufacturerProduct)
            {
                if (Box::confirm("Do you want to create new part number", strFmt(myEcoResManufacturerProduct::txtNotExist(), _manufacturerPartNbr)))
                {
                    try
                    {
                        myEcoResManufacturerProduct.EcoResProductRecId        = inventTable.Product;
                        myEcoResManufacturerProduct.EcoResManufacturerRecId   = myEcoResManufacturer.RecId;
                        myEcoResManufacturerProduct.EcoResManufacturerPartNbr = _manufacturerPartNbr;
                        myEcoResManufacturerProduct.EcoResManufacturerDefault = NoYes::Yes;
                        if (myEcoResManufacturerProduct.validateWrite())
                        {
                            myEcoResManufacturerProduct.insert();
                        }
                    }
                    catch
                    {
                        Error("Failed to create new part number");
                    }
                }
            }
        }

        return myEcoResManufacturerProduct;
    }

Labels:

D365,

Event handler,

Extension,

FormReferenceGroup,

overload

Friday, August 17, 2018

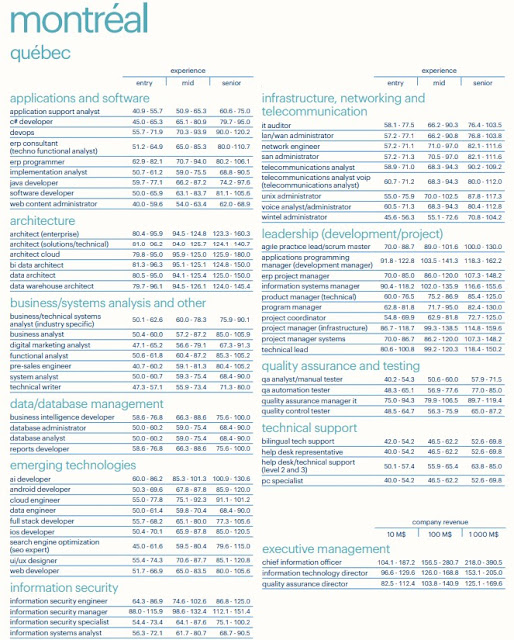

TECH SALARIES IN TORONTO, MONTREAL, VANCOUVER REVEALED (2018)

KOVASYS have published a short article on IT salaries in Canadian cities, based on a Randstad report; it is worth reading.

For example, about Montreal

Tuesday, July 31, 2018

MICROSOFT DYNAMICS SALARY SURVEY 2018 by Nigel Frank

Yeah! They did it again! Tenth time in a row!

I entered the Microsoft Dynamics world ten years ago - almost at the same time as Nigel Frank started this great project. I have always believed that knowing others' salaries can help us - hired workers and freelancers - better position ourselves on the market. More than 14,000 respondents make this survey report a valuable guide for those wondering about modern trends.

It is interesting how consultants and developers have been racing each other over these years. It probably happens because of Microsoft's efforts to ease development by moving to Visual Studio and to add more and more complex functionality to their products.

Once I got my Microsoft Dynamics AX certification, I was told that it was mutually beneficial to me and to the company and could not be a reason for a salary increase. As we can see, it happens in 70% of cases.

At the same time, the main reasons why specialists start flirting with the idea of changing their current job are lack of salary increase (57%) and lack of promotion prospects (54%). A good point to think about!

More than half of the respondents said they were ready to consider relocating, even to another country! And, yes, Canada is destination number one!

Now, being a father of two toddlers, I really appreciate that I am allowed to work from home: until recently it was three days a week.

As in previous years, Dynamics AX is still in the top 3, together with CRM and NAV. Let's take a look at USA and Canada salaries.

I have made notes on just a few points, but you can find a lot of other interesting analytics provided by Nigel Frank in this report. Great job!

As an improvement suggestion, it would be amazing to get timeline trends by other Microsoft products as well as by region, occupation, etc. They already have all the needed data; therefore, it is just a few hours of work for a Microsoft Power BI developer! :)

previous post about the report

Tuesday, July 24, 2018

Wednesday, July 18, 2018

Wednesday, June 6, 2018

Extensions and Edit and Display methods declaration in D365

Just a short note for my current PU.

Display methods work well as instance methods from table extensions. As to edit-methods, we still need to declare them as static.

    [ExtensionOf(tableStr(ProjProposalJour))]
    final class myProjProposalJourTable_ProjInvReport_Extension
    {
        // edit methods are declared static, with the table buffer passed as the first argument
        static public server edit myProjInvReportFmtDescWithBr myEditInvReportFormatWithBR(ProjProposalJour _this, boolean _set, PrintMgmtReportFormatDescription _newReportFormat)
        {
            PrintMgmtReportFormatDescription newReportFormat = _newReportFormat;
            PrintMgmtReportFormatDescription reportFormat;

            reportFormat = ProjInvoicePrintMgmt::myGetReportFormatWithBR(_this);

            if (_set)
            {
                if (_this.RecId && newReportFormat && newReportFormat != reportFormat)
                {
                    ProjInvoicePrintMgmt::myCreateOrUpdateInvoiceWithBRPrintSettings(_this, PrintMgmtNodeType::ProjProposalJour, newReportFormat);
                    reportFormat = newReportFormat;
                }
            }

            return reportFormat;
        }
    }

Do not forget to pass the table buffer as the first argument.

More detail can be found in Vania's article about news in PU11.
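For contrast with the static edit method, here is a minimal sketch of a display method declared as an instance method in a table extension. The class and method names are hypothetical, and CustTable with its standard `name()` method is used only as a convenient example table:

```xpp
[ExtensionOf(tableStr(CustTable))]
final class myCustTable_DisplayDemo_Extension
{
    // Instance display method: "this" is the CustTable buffer,
    // so no extra buffer argument is needed
    public display DirPartyName myDisplayUpperCaseName()
    {
        return strUpr(this.name());
    }
}
```

On a form, such a method would be referenced from a control's data method property in the usual way; whether edit methods can also become instance methods depends on the platform update you are on.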

How to add a new report for a business document in D365

My colleague Rod showed me this when I needed to add a new report for Project invoice proposals with billing rules and make it available in Print management settings.

    /// <summary>
    /// Subscribes to print mgmt report format publisher to populate custom reports
    /// </summary>
    [SubscribesTo(classstr(PrintMgmtReportFormatPublisher), delegatestr(PrintMgmtReportFormatPublisher, notifyPopulate))]
    public static void notifyPopulate()
    {
        #PrintMgmtSetup

        void addFormat(PrintMgmtDocumentType _type, PrintMgmtReportFormatName _name, PrintMgmtReportFormatCountryRegionId _countryRegionId = #NoCountryRegionId)
        {
            myPrintMgtDocType_ProjInvReport_Handler::addPrintMgmtReportFormat(_type, _name, _name, _countryRegionId);
        }

        addFormat(PrintMgmtDocumentType::myProjInvoiceWithBR, ssrsReportStr(myPSAContractLineInvoice, Report));
    }

    /// <summary>
    /// Adds a report format to the printMgtReportFormat table
    /// </summary>
    /// <param name = "_type">PrintMgmtDocumentType value</param>
    /// <param name = "_name">Name of the report (ie. reportname.Report)</param>
    /// <param name = "_description">Description of the report (ie. reportname.Report)</param>
    /// <param name = "_countryRegionId">Country or default (#NoCountryRegionId)</param>
    /// <param name = "_system">True if this is a system report</param>
    /// <param name = "_ssrs">SSRS report or another type</param>
    private static void addPrintMgmtReportFormat(
        PrintMgmtDocumentType                   _type,
        PrintMgmtReportFormatName               _name,
        PrintMgmtReportFormatDescription        _description,
        PrintMgmtReportFormatCountryRegionId    _countryRegionId,
        PrintMgmtReportFormatSystem             _system = false,
        PrintMgmtSSRS                           _ssrs = PrintMgmtSSRS::SSRS)
    {
        PrintMgmtReportFormat printMgmtReportFormat;

        select firstonly printMgmtReportFormat
            where printMgmtReportFormat.DocumentType    == _type
               && printMgmtReportFormat.Description     == _description
               && printMgmtReportFormat.CountryRegionId == _countryRegionId;

        if (!printMgmtReportFormat)
        {
            // Add the new format
            printMgmtReportFormat.clear();
            printMgmtReportFormat.DocumentType    = _type;
            printMgmtReportFormat.Name            = _name;
            printMgmtReportFormat.Description     = _description;
            printMgmtReportFormat.CountryRegionId = _countryRegionId;
            printMgmtReportFormat.System          = _system;
            printMgmtReportFormat.ssrs            = _ssrs;
            printMgmtReportFormat.insert();
        }
    }

Then I can pick it up in Print management settings in Project module.

It may be a good idea to delete records from PrintMgmtReportFormat table; all its records will be recreated the next time you open Print management form.

Labels:

business document,

D365,

print management,

project,

report,

SSRS

Thursday, May 24, 2018

Simple form for Financial dimension value set lookup

Simple form for Financial dimension lookup.

On any form, a consultant can add a Fin dim field, which is actually just a RecId, and then click on it to see the real value set. (Added FormRef to DimensionAttributeValueSet table.)

If opened as a separate window, the form lets you look up any fin dim value.

Download AX 2012 XPO file.

Friday, March 9, 2018

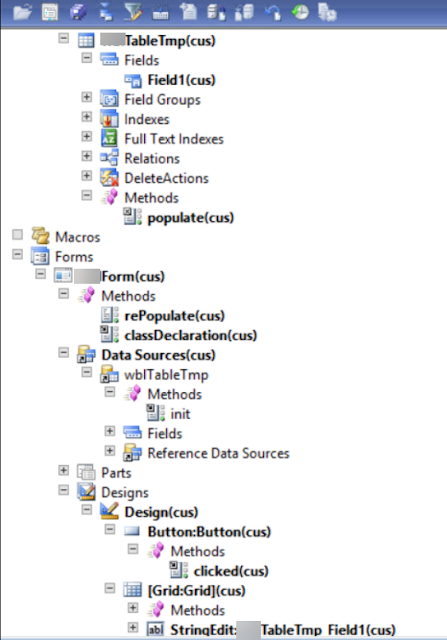

TempDB table on a form with multiple updates

If you, like me, are still trying to understand how to use a TempDB table in a form and update it as many times as you need, or you are getting the error

"Cannot execute the required database operation.The method is only applicable to TempDB table variables that are not linked to existing physical table instance."

then you had better read the following short explanation.

Let's say your tempDB table is meant to be populated by request from a form on the server side.

In the form data source Init() we just initialize another tempDB buffer of the same type and link its physical instance to the current data source buffer.

    public void init()
    {
        super();

        myTableTmpLocal.doInsert();
        delete_from myTableTmpLocal;
        //myTableTmp::populate(myTableTmpLocal); // <-- no need at this step!
        // if you need to populate it here by default, then comment the two previous lines
        myTableTmp.linkPhysicalTableInstance(myTableTmpLocal);
    }

Any time you need to update its content, just re-populate it in a method by providing the linked temporary buffer from the form.

    void clicked()
    {
        super();

        element.rePopulate();
    }

    public void rePopulate()
    {
        myTableTmp::populate(myTableTmpLocal);
        myTableTmp.linkPhysicalTableInstance(myTableTmpLocal);
        myTableTmp_DS.research();
    }

Populating method defined on the table, for example.

    static server void populate(myTableTmp _myTableTmp)
    {
        int k;

        delete_from _myTableTmp; //<-- important to not have duplicates!

        for (k = 1; k <= 4; k++)
        {
            _myTableTmp.Field1 = int2str(k);
            _myTableTmp.insert();
        }
    }

All credit for this trick goes to Iulian Cordobin.

Thursday, March 1, 2018

AX 2012 R3 CU13 Bug: DMF Bundle batch processing misses records

If you load many records via DMF, you will most probably split them across a number of threads.

Unfortunately, there is a bug with it in CU13.

When this batch job ends, you will find that only 8*1041 records were processed.

As you can see, the record ids are calculated correctly, but the processing method gets the wrong variables.

Down in the code, the batch task itself is set for a wrong number of records, too.

This is how to fix this bug.

There are two lessons we can take from this story:

- do not touch the code if it works;

- use really good names for your variables (this is not a strongly typed environment!)

AX 2012 R3 CU13 Bug: DMF default value mapping for String type

There is a bug in AX 2012 R3 CU13.

If you select the Default type for a String-type field in your data entity staging mapping, the system takes the first record whose XML name matches the "Default" value to get the linked field and compares against that field's string length.

To fix it, we need to make the following changes in three methods of the form DMFStagingDefaultTable

    public class FormRun extends ObjectRun
    {
        SysDictField            dictField;
        DMFFieldType            dmfFieldType;
        DMFSourceXMLToEntityMap dmfSourceXMLToEntityMap;
        Types                   valueType;
        // Begin: Alexey Voytsekhovskiy
        int                     myMaxStrSize;
        // End: Alexey Voytsekhovskiy
    }

    public void init()
    {
        Args                                args;
        DMFEntity                           entity;
        DMFDefinitionGroupEntityXMLFields   dmfDefinitionGroupEntityXMLFields;
        SysDictType                         dictType;

        #define.Integer('Integer')
        #define.String('String')
        #define.RealMacro('Real')
        #define.DateMacro('Date')
        #define.DateTimeMacro('UtcDateTime')
        #define.Time('Time')

        super();

        if (!element.args() || element.args().dataset() != tableNum(DMFSourceXMLToEntityMap))
        {
            throw error(strfmt("@SYS25516", element.name()));
        }

        args = element.args();
        dmfSourceXMLToEntityMap = args.record();

        // Begin: Alexey Voytsekhovskiy
        select firstOnly FieldType, FieldSize from dmfDefinitionGroupEntityXMLFields
            where dmfDefinitionGroupEntityXMLFields.FieldName       == dmfSourceXMLToEntityMap.EntityField
               && dmfDefinitionGroupEntityXMLFields.Entity          == dmfSourceXMLToEntityMap.Entity
               && dmfDefinitionGroupEntityXMLFields.DefinitionGroup == dmfSourceXMLToEntityMap.DefinitionGroup;

        myMaxStrSize = dmfDefinitionGroupEntityXMLFields.FieldSize ? dmfDefinitionGroupEntityXMLFields.FieldSize : 256;
        // End: Alexey Voytsekhovskiy

        if (dmfDefinitionGroupEntityXMLFields.FieldType && !dmfSourceXMLToEntityMap.IsAutoDefault)
        {
            dmfFieldType = dmfDefinitionGroupEntityXMLFields.FieldType;

            if (dmfsourceXMLToEntityMap.EntityField)
            {
                entity    = DMFEntity::find(dmfsourceXMLToEntityMap.Entity);
                dictField = new SysDictField(tableName2id(entity.EntityTable), fieldName2id(tableName2Id(entity.EntityTable), dmfsourceXMLToEntityMap.EntityField));
            }

            switch (dmfDefinitionGroupEntityXMLFields.FieldType)
            {
                case #Integer:
                    DMFStagingConversionTable_StagingIntValue.visible(true);
                    break;
                case #String:
                    DMFStagingConversionTable_StagingStrValue.visible(true);
                    break;
                case #RealMacro:
                    DMFStagingConversionTable_StagingRealValue.visible(true);
                    break;
                case #DateMacro:
                    DMFStagingConversionTable_StagingDateValue.visible(true);
                    break;
                case #DateTimeMacro:
                    DMFStagingConversionTable_StagingUtcDataTime.visible(true);
                    break;
                case #Time:
                    DMFStagingConversionTable_StagingTimeValue.visible(true);
                    break;
                default:
                    warning("@DMF694");
                    element.close();
            }
        }
        else if (dmfSourceXMLToEntityMap.IsAutoDefault)
        {
            entity    = DMFEntity::find(dmfsourceXMLToEntityMap.Entity);
            dictField = new SysDictField(tableName2id(entity.EntityTable), fieldName2id(tableName2Id(entity.EntityTable), dmfsourceXMLToEntityMap.EntityField));
            dictType  = new SysDictType(dictField.typeId());

            if (dictType && dictType.isTime())
            {
                valueType = Types::Time;
            }
            else
            {
                valueType = dictField.baseType();
            }

            dmfFieldType = enum2str(dictField.baseType());

            switch (valueType)
            {
                case Types::Integer:
                    DMFStagingConversionTable_StagingIntValue.visible(true);
                    break;
                case Types::String:
                    DMFStagingConversionTable_StagingStrValue.visible(true);
                    break;
                case Types::Real:
                    DMFStagingConversionTable_StagingRealValue.visible(true);
                    break;
                case Types::Date:
                    DMFStagingConversionTable_StagingDateValue.visible(true);
                    break;
                case Types::UtcDateTime:
                    DMFStagingConversionTable_StagingUtcDataTime.visible(true);
                    break;
                case Types::Time:
                    DMFStagingConversionTable_StagingTimeValue.visible(true);
                    break;
                case Types::Guid:
                    DMFStagingConversionTable_StagingGuidValue.visible(true);
                    break;
                default:
                    warning("@DMF694");
                    element.close();
            }
        }
        else
        {
            element.close();
        }
    }

and on the sole form data source field StagingStrValue

    public boolean validate()
    {
        boolean                             ret;
        DMFDefinitionGroupEntityXMLFields   dmfDefinitionGroupEntityXMLFields;

        ret = super();

        if (ret)
        {
            // Begin: Alexey Voytsekhovskiy
            if (strLen(DMFStagingConversionTable.StagingStrValue) > myMaxStrSize)
            {
                throw error("@DMF788");
            }
            // select firstOnly FieldSize from dmfDefinitionGroupEntityXMLFields
            //     where dmfDefinitionGroupEntityXMLFields.FieldName == dmfSourceXMLToEntityMap.XMLField;
            //
            // if(dmfDefinitionGroupEntityXMLFields.FieldSize)
            // {
            //     if(strLen(DMFStagingConversionTable.StagingStrValue) > dmfDefinitionGroupEntityXMLFields.FieldSize)
            //     {
            //         throw error("@DMF788");
            //     }
            // }
            // End: Alexey Voytsekhovskiy
        }

        return ret;
    }

Saturday, February 10, 2018

How to update progress in DMF batch tasks

I do not know why, but the standard DMF writer does not update its batch task progress. It is really dull to run the DMF target step and watch 0% progress for a long time, only guessing what the ETA is.

Fortunately it is easy to fix. The whole idea is simple: init a server side progress and update it every time the next record is processed.

DMFEntityWriter class

    private void myProgressServerInit(RefRecId _startRecId, RefRecId _endRecId)
    {
        if (xSession::isCLRSession())
        {
            // this progress just updates percentage in Batch task form
            myProgressServer = RunbaseProgress::newServerProgress(1, newGuid(), -1, DateTimeUtil::minValue());
            myProgressServer.setTotal(_endRecId - _startRecId);
        }
    }

    public container write(DMFDefinitionGroupExecution _definitionGroupExecution,
                           DMFdefinationGroupName      _definitionGroup,
                           DMFExecutionID              _executionId,
                           DMFEntity                   _entity,
                           boolean                     _onlyErrored,
                           boolean                     _onlySelected,
                           RefRecId                    _startRefRecId = 0,
                           RefRecId                    _endRefRecId = 0,
                           boolean                     _isCompare = false,
                           DmfStagingBundleId          _bundleId = 0)
    {
        Common target;
        ...
        if (_entity.TargetIsSetBased)
        {
            ...
        }
        else
        {
            ...
            while (nextStartRecId <= lastRecId)
            {
                ...
                try
                {
                    this.myProgressServerInit(startRefRecId, endRefRecId);
                    ...
                    while select staging
                        where staging.(defGroupFieldId) == _definitionGroup
                           && staging.(execFieldId) == _executionId
                           && ( (!_onlyErrored && !_onlySelected)
                             || (_onlyErrored && staging.(transferStatusFieldId) == DMFTransferStatus::Error)
                             || (_onlySelected && staging.(selectedFieldId) == NoYes::Yes) )
                        join tmpStagingRecords
                            where tmpStagingRecords.RecId >= startRefRecId
                               && tmpStagingRecords.RecId <= endRefRecId
                               && tmpStagingRecords.StagingRecordRecId == staging.RecId
                    {
                        try
                        {
                            this.myProgressServerIncCount();
                            ...
                        }
                        catch
                        {
                            ...
                        }
                    }
                }
                this.myProgressServerKill();
            }
            ...
            return [newCount, updCount, stagingLogRecId,msgDisplayed];
        }
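The write() method above calls two helpers that are not listed in the post. What follows is only a guess at what they might look like, assuming `myProgressServer` is a `RunbaseProgress` member added to the DMFEntityWriter class declaration and that `incCount()` and `kill()` are available on it (both are written from memory, so check them against your application version):

```xpp
// Hypothetical helper bodies; not the original code from the post
private void myProgressServerIncCount()
{
    // the progress object exists only when running in a CIL session
    if (myProgressServer)
    {
        myProgressServer.incCount();
    }
}

private void myProgressServerKill()
{
    if (myProgressServer)
    {
        myProgressServer.kill();
        myProgressServer = null;
    }
}
```

The null checks matter because myProgressServerInit() creates the progress object only when `xSession::isCLRSession()` returns true.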

Why is my batch job still waiting?

You probably have compilation errors in CIL. Try running a full CIL compilation.

Tuesday, February 6, 2018

Why your method is still not running in CIL

Please do not forget about the third parameter when you call your CIL wrapper.

It won't run in CIL if the current transaction level is not equal to zero, like in the following example with the standard import of Zip codes.

However, you can try to force ignoring TTS level at your own risk.

It works perfectly for my scenario.

This is how to check if your code is running in CIL.
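As a minimal sketch of such a check, `xSession::isCLRSession()` tells whether the current code is executing as CIL. The job name here is hypothetical; in practice the same condition would sit inside the method you expect to run in CIL, since a job started from the client runs in the X++ interpreter:

```xpp
static void myCheckCILSession(Args _args)
{
    if (xSession::isCLRSession())
    {
        info("Running in CIL");
    }
    else
    {
        info("Running in the X++ interpreter");
    }
}
```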

Friday, February 2, 2018

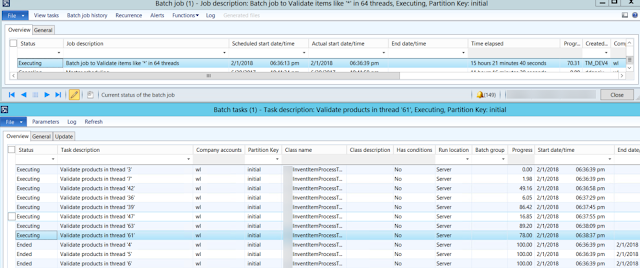

Parallel Items/Product Validating or Deleting

If you need to validate or delete a BIG number of items/products or any other records, it is better to run such processing, first, in CIL and, second, in parallel threads.

    private static server int64 populateItems2Process(str 20 _what2find, int _batchThreads)
    {
        myInventItemProcessTable    myInventItemProcessTable;
        InventTable                 inventTable;
        int                         firstThread = 1;
        Counter                     countr;

        // flush all previously created items from the table
        delete_from myInventItemProcessTable;

        // insert all needed items in one shot. this part can be refactored to use Query instead
        insert_recordset myInventItemProcessTable (threadNum, ItemRecId, ItemId)
            select firstThread, RecId, ItemId from InventTable
                where inventTable.itemId like _what2find;

        // now group them in threads by simply enumerating them from 1 to N
        countr = 1;
        ttsBegin;
        while select forUpdate myInventItemProcessTable
        {
            myInventItemProcessTable.threadNum = countr;
            myInventItemProcessTable.update();
            countr++;
            if (countr > _batchThreads)
            {
                countr = 1;
            }
        }
        ttsCommit;

        // return the total number of items to process
        select count(RecId) from myInventItemProcessTable;
        return myInventItemProcessTable.RecId;
    }

    public void run()
    {
        // get all required items by their RecIds in the table and group them in threads
        int64 totalRecords = myInventItemProcessBatch::populateItems2Process(what2find, batchThreads);

        if (totalRecords)
        {
            info(strFmt("Found %1 items like '%2' to %3", totalRecords, what2find, processType));
            // create number of batch tasks to parallel processing
            this.scheduleBatchJobs();
        }
        else
        {
            warning(strFmt("There are no items like '%1'", what2find));
        }
    }
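The scheduleBatchJobs() method called above is not shown in this post. A minimal sketch of what such a method might look like follows; it is not the project's actual code. It assumes that myInventItemProcessTask extends RunBaseBatch, can be created with a parameterless new(), and exposes a hypothetical parmThreadNum() accessor. BatchHeader, addTask(), addRuntimeTask(), isInBatch() and parmCurrentBatch() are standard AX 2012 batch framework members.

```xpp
// Sketch only: one batch task per thread number.
private void scheduleBatchJobs()
{
    BatchHeader             batchHeader;
    myInventItemProcessTask task;
    int                     threadNum;

    if (this.isInBatch())
    {
        // Already running in batch: add runtime tasks to the current job
        batchHeader = BatchHeader::getCurrentBatchHeader();
    }
    else
    {
        // Started interactively: create a new batch job
        batchHeader = BatchHeader::construct();
        batchHeader.parmCaption(strFmt("Process items like '%1'", what2find));
    }

    for (threadNum = 1; threadNum <= batchThreads; threadNum++)
    {
        task = new myInventItemProcessTask();
        task.parmThreadNum(threadNum);  // hypothetical accessor

        if (this.isInBatch())
        {
            batchHeader.addRuntimeTask(task, this.parmCurrentBatch().RecId);
        }
        else
        {
            batchHeader.addTask(task);
        }
    }

    batchHeader.save();
}
```

Each task then only reads the rows of the dispatching table that carry its own thread number, so the tasks do not compete for the same records.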

...

    select count(RecId) from inventTable
        exists join myInventItemProcessTable
            where myInventItemProcessTable.ItemRecId == inventTable.RecId
               && myInventItemProcessTable.threadNum == threadNum;

    // total number of lines to be processed
    totalLines = inventTable.RecId;

    // to entertain our bored user during the next few hours
    // this progress just updates the percentage in the Batch task form
    progressServer = RunbaseProgress::newServerProgress(1, newGuid(), -1, DateTimeUtil::minValue());
    progressServer.setTotal(totalLines);

    while select inventTable
        exists join myInventItemProcessTable
            where myInventItemProcessTable.ItemRecId == inventTable.RecId
               && myInventItemProcessTable.threadNum == threadNum
    {
        progressServer.incCount();
        try
        {
            // RUN YOUR LOGIC HERE //////////////////////

...

Labels:

batch processing,

CIL,

inventTable,

overload,

parallel computing

Friday, January 5, 2018

AX 2012 Wizard does not update Analysis Services Project: workaround

Recently I bumped into a strange issue in AX 2012, which prevents importing Analysis Services Projects to AOT.

Given that this import is an essential part of cubes development and deployment, I decided to find a way to get this thing done.

The issue is that, although all changes made to your perspective show up correctly in the Wizard tree, they are never saved back to the AOT. Therefore, the previous version of your project is always deployed to SQL, no matter what you try to achieve.

The workaround is pretty simple. Set up your perspective, run the Wizard, and let it finish its job. Leave the Deploy option unchecked, because deploying at this point is pointless.

Once the Wizard window is closed, just find your project under the Analysis Services Projects node and delete it.

Then find a recently created folder in your TEMP directory; this one must contain your recently added artifacts, say, financial dimensions as depicted.

Here we go.

Now just import this particular project back to AOT, and run the Wizard again to deploy the projects.

It is also worth double-checking the project content in Visual Studio before running the Wizard for the second time.

If you missed the target, find the right folder with your added/changed objects.

Now the Wizard should find no changes and just deploy it.
